About Me

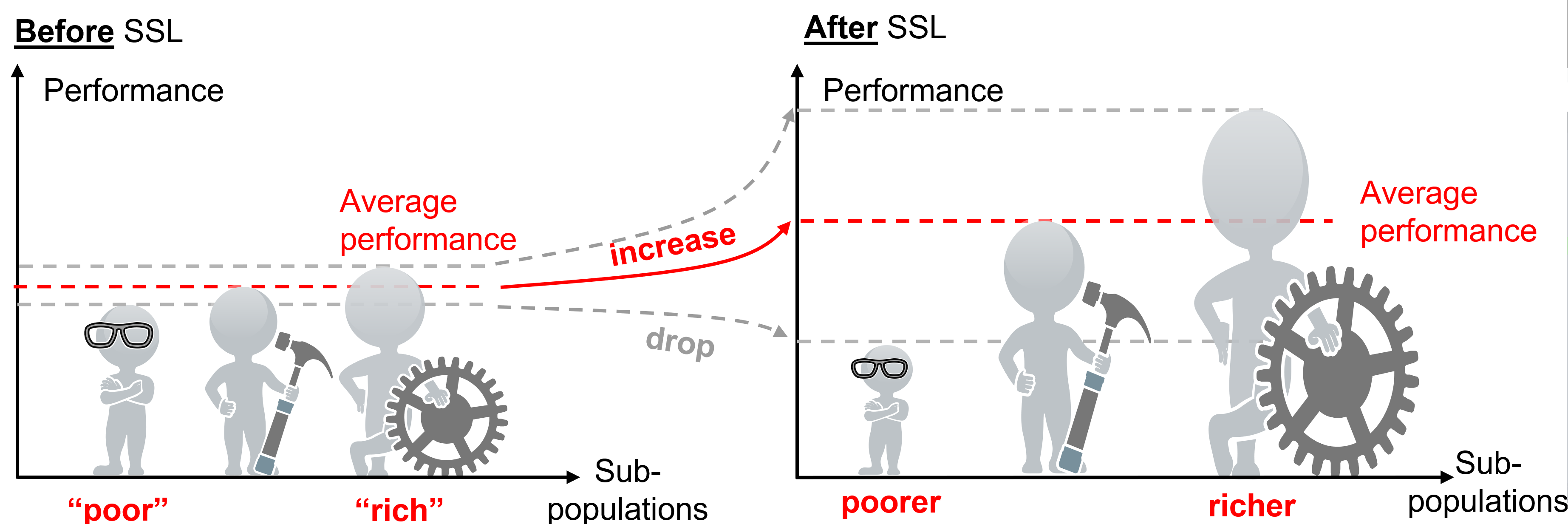

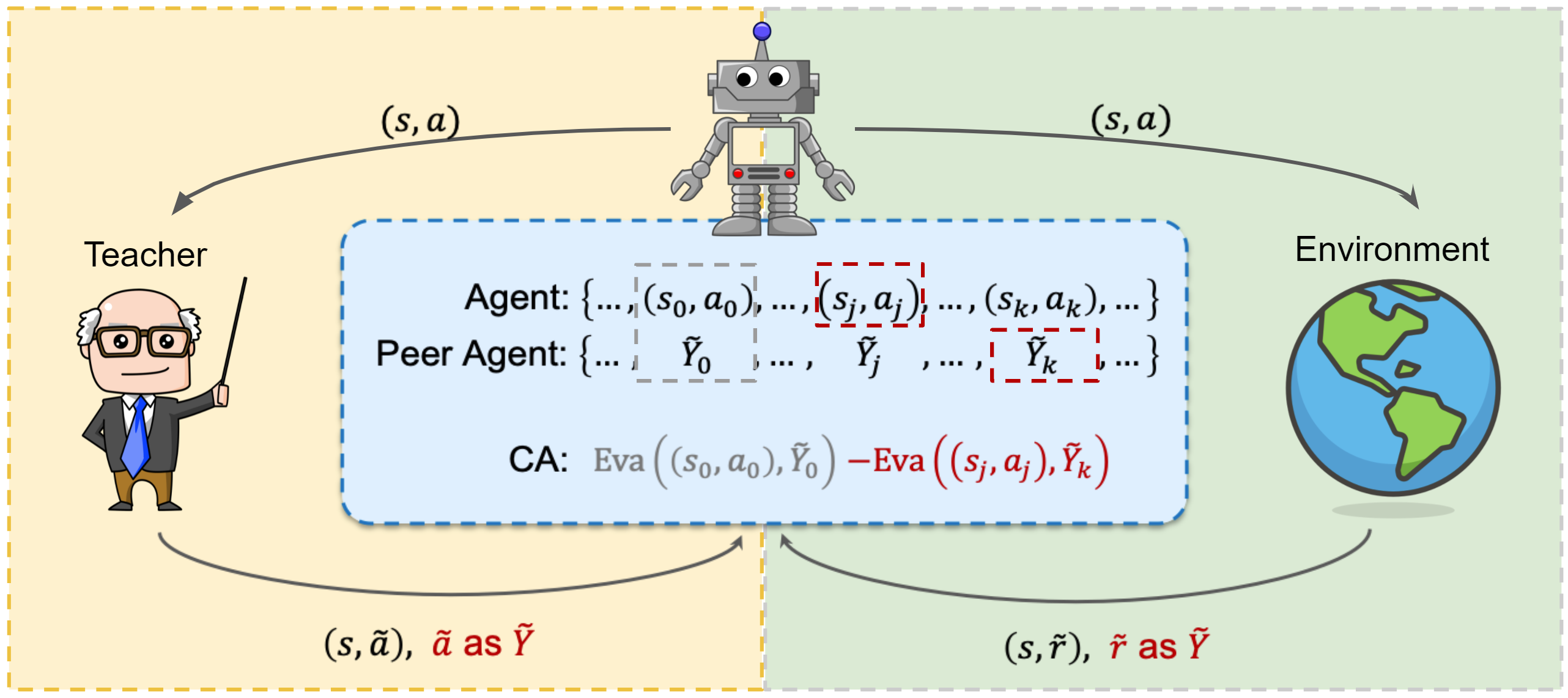

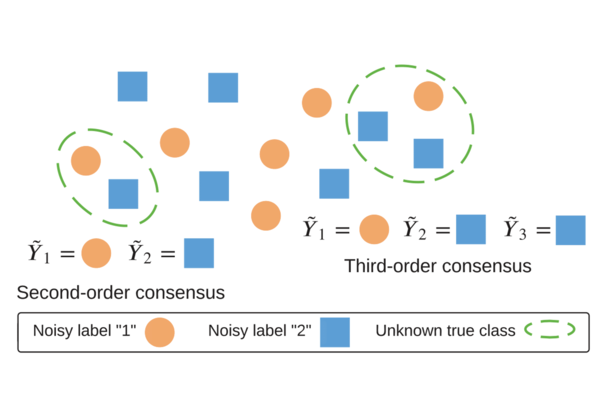

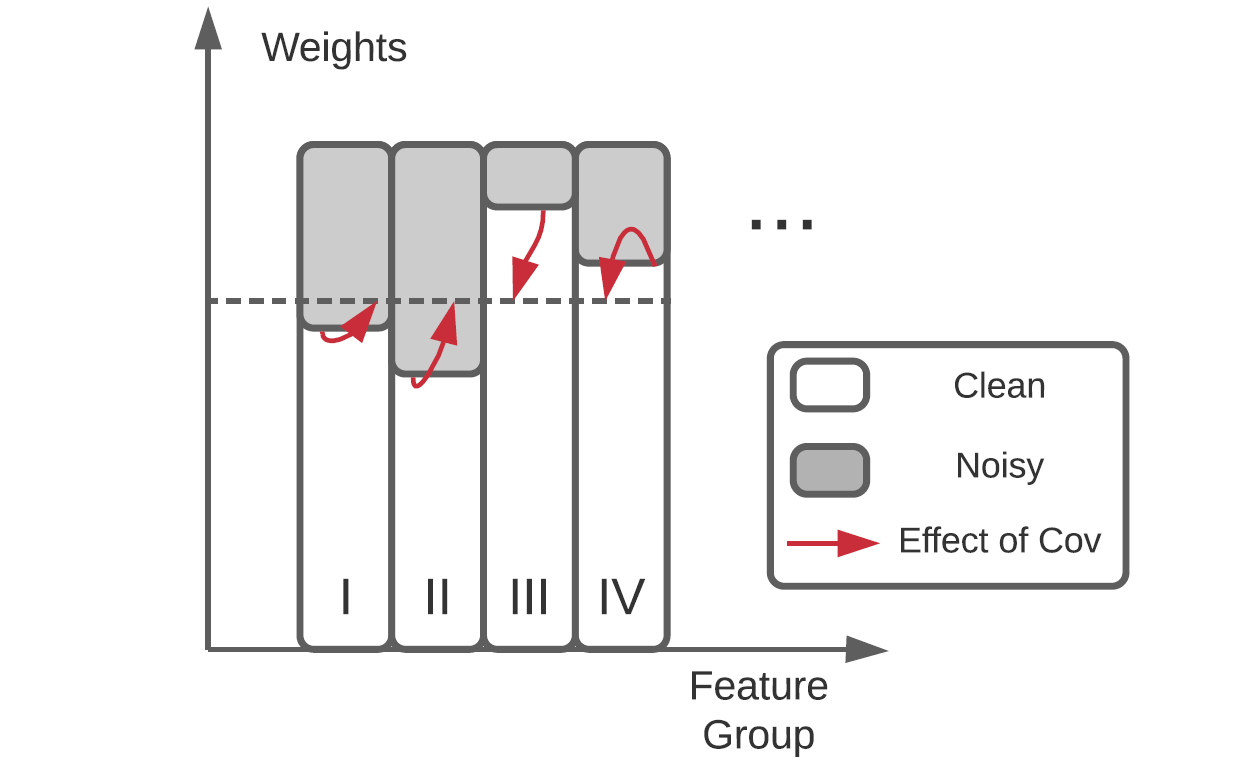

I am currently a researcher at Docta.ai. My work focuses on data-centric AI, large language models (LLMs), and advancing responsible, explainable, and trustworthy AI. I continue to explore weakly-supervised learning techniques (including handling label noise, semi-supervised, and self-supervised learning), fairness in machine learning, and federated learning. I am particularly interested in addressing the biases present in machine learning datasets and algorithms.

I received my Ph.D. from the University of California, Santa Cruz, in 2023, where I was advised by Professor Yang Liu. Prior to UCSC, I received the B.S. degree from the University of Electronic Science and Technology of China (UESTC), Chengdu, China, in 2016, under the supervision of Prof. Wenhui Xiong, and received the M.S. degree (with honor) from ShanghaiTech University, Shanghai, China, under the supervision of Prof. Xiliang Luo.

Publication Google Scholar